A few days ago, I shared these lines in a blog post titled Planck:

The “spin” refers to electron spin; the “wave” to quantum wave functions. But the inspiration behind my words came not from physics alone—it was Robert Frost’s immortal poem The Road Not Taken. I was in a taxi, traveling along Abingdon Road from Oxford station to Egrove Park, part of the university. That poem came to mind in moments of reflection.

Photo: Oxford, new beginnings

Frost’s work is widely taught in universities around the world, including Oxford. On the surface, The Road Not Taken appears to celebrate bold, unconventional choices. That’s the reading most of us take away on a first encounter. But over the years, I came to a different conclusion: The Road Not Taken is a meditation on how we construct meaning from the paths we choose and those we leave behind.

Next month, I turn 58. I first arrived at Oxford more than 35 years ago. And now, I find myself asking: What of my life since then? What of the roads not taken, the life I didn’t chase?

When we look back and wonder, “What if I’d chosen differently?” we’re not just reminiscing. We’re engaging in a kind of probabilistic reasoning that Bayes’ Theorem makes precise. Named after Thomas Bayes, an 18th-century English statistician, philosopher and Presbyterian minister, the theorem offers a way to update our beliefs as new evidence emerges.

Bayes’ key insight is simple, yet profound:

We can revise our understanding as we learn more.
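
As a minimal sketch of what "revising our understanding" looks like in numbers, the Python snippet below applies Bayes' rule once. The function name `bayes_update`, its parameters and the probabilities are illustrative assumptions, not figures from any real data.

```python
def bayes_update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Return P(H | E) given P(H), P(E | H) and P(E | not H)."""
    # Total probability of seeing the evidence, whether or not H holds.
    p_evidence = p_evidence_if_true * prior + p_evidence_if_false * (1.0 - prior)
    if p_evidence == 0.0:
        raise ValueError("evidence has zero probability under both hypotheses")
    # Bayes' rule: weight the prior by how well H explains the evidence.
    return p_evidence_if_true * prior / p_evidence


# Hypothetical numbers: start 50/50 on "the road I took was the right one",
# then observe something three times likelier if that belief is true.
posterior = bayes_update(prior=0.5, p_evidence_if_true=0.6, p_evidence_if_false=0.2)
print(f"belief after the evidence: {posterior:.2f}")
```

The belief moves from 0.50 to 0.75: the evidence does not settle the question, it only shifts the weight.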

This principle is foundational to how we learn, predict, and adapt – whether as humans or machines. In Frost’s poem, the speaker stands at a fork in the woods, chooses one path, and later reflects that it “has made all the difference.” But was the path truly “less traveled”, to borrow that oft-used phrase? Did the choice matter as much as he claims? Is there a quiet note of regret?

This is where Bayes enters – not to tell us which path to take but to guide how we think about the path we’ve taken. It’s a framework for reflection, for learning from experience, for evolving. The same framework serves machines well:

- Handles uncertainty: Real-world data is rarely perfect. Bayes helps AI make the best guess with what it has.

- Learns over time: As more data comes in, the system’s estimates improve, much as human intuition does.

- Transparent reasoning: Bayesian models often provide interpretable probabilities, which is crucial for ethical AI and trust.

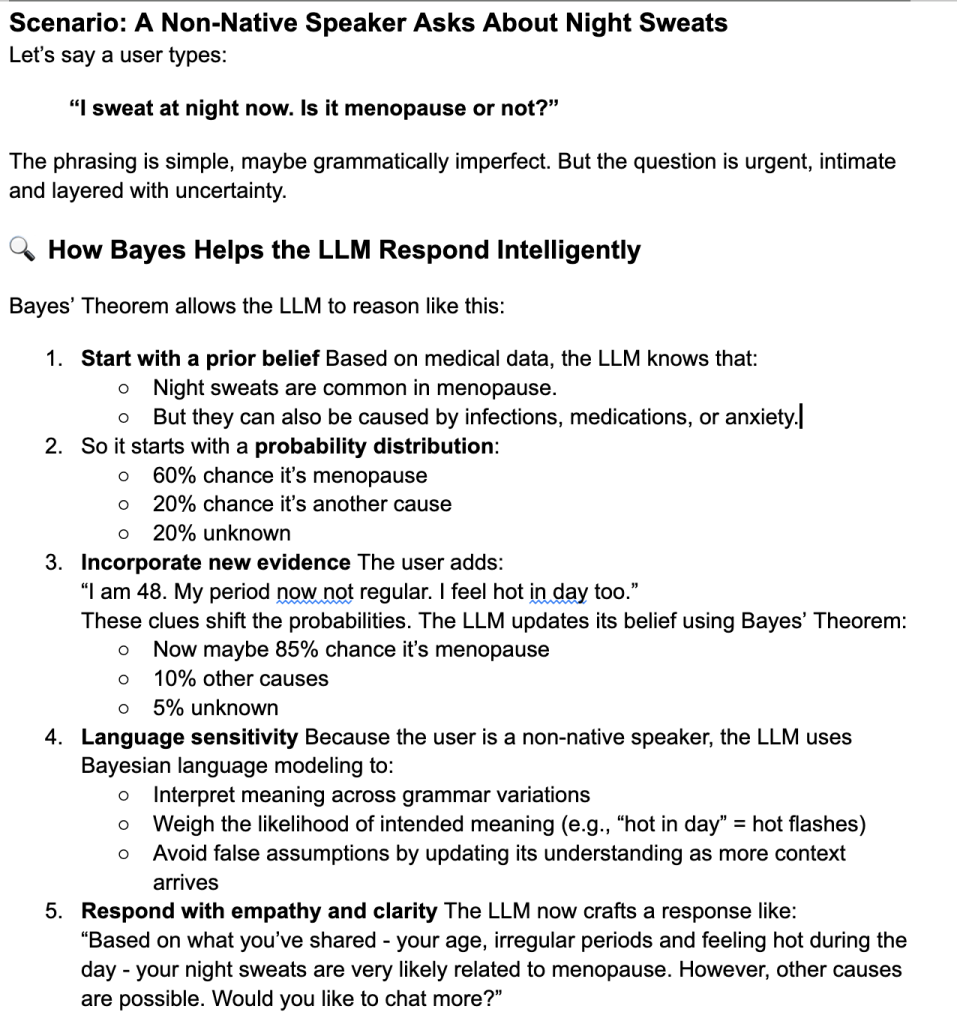
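
The "learns over time" point in the list above can be sketched with a running estimate of an unknown success rate. This is only an illustration under stated assumptions: the `BetaBelief` class, the uniform starting prior and the observations are all made up for the example, and it is not a description of how any production system works.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Belief about an unknown success rate, stored as Beta(alpha, beta)."""
    alpha: float = 1.0  # prior pseudo-count of successes (1, 1 is a uniform prior)
    beta: float = 1.0   # prior pseudo-count of failures

    def update(self, success: bool) -> None:
        # Conjugate update: each observation adds one to the matching count.
        if success:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        # Posterior mean estimate of the success rate.
        return self.alpha / (self.alpha + self.beta)


belief = BetaBelief()
observations = [True, True, False, True, True, True, False, True]  # made-up data
for seen, outcome in enumerate(observations, start=1):
    belief.update(outcome)
    print(f"after {seen} observations: estimated rate = {belief.mean:.2f}")
```

Each new observation nudges the estimate rather than replacing it, and the belief is always available as an explicit probability, which is the "transparent reasoning" point in miniature.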

In artificial intelligence, Bayes’ Theorem is the heartbeat of probabilistic machine learning. Bayesian methods are used in areas such as self-driving cars, medical diagnostics and fraud detection – and they are central to the Fab4050 LLM we’re building to answer menopause-related questions safely and sensitively. Real-world data is messy. Language is nuanced. Experiences are diverse. Bayes helps our system adapt, refine and respond with care (it has to pass regulatory requirements too). Here is an example of how we use Bayes in Fab4050’s LLM:

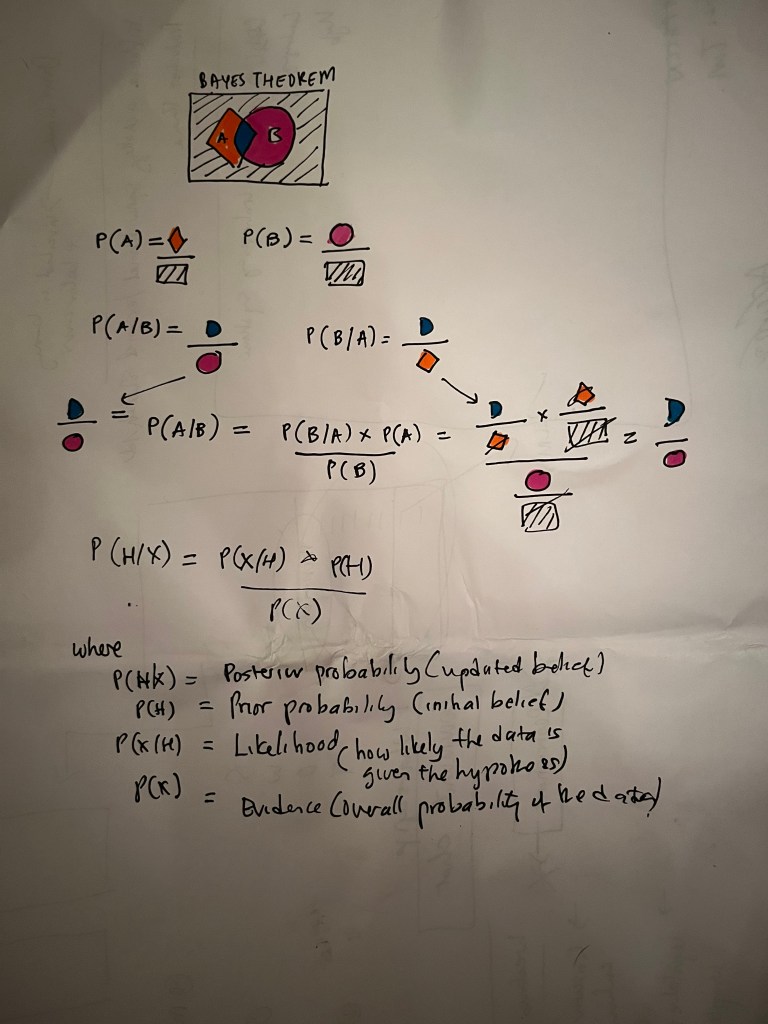

Here’s the maths, pictorially:
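
For readers who prefer symbols, the standard statement of the theorem is given here. In this sketch, $H$ is a hypothesis (say, "the road I took was the right one") and $E$ is the evidence observed since; the letters are just labels chosen for illustration.

$$
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}, \qquad P(E) = P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)
$$

$P(H)$ is the prior belief, $P(E \mid H)$ is how likely the evidence is if the hypothesis holds, and $P(H \mid E)$ is the updated belief once the evidence is in.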

Bayes doesn’t take away the ache of what might have been – it helps us make peace with it. It helps us understand it. It reminds us that we never have perfect information at the time we make any decision. We make choices in uncertainty. And we create meaning not from certainty, but from how we interpret the evidence – how we grow, how we narrate, how we live forward.

Pure maths can teach us so much about life, but then that’s just my autistic self’s opinion.
